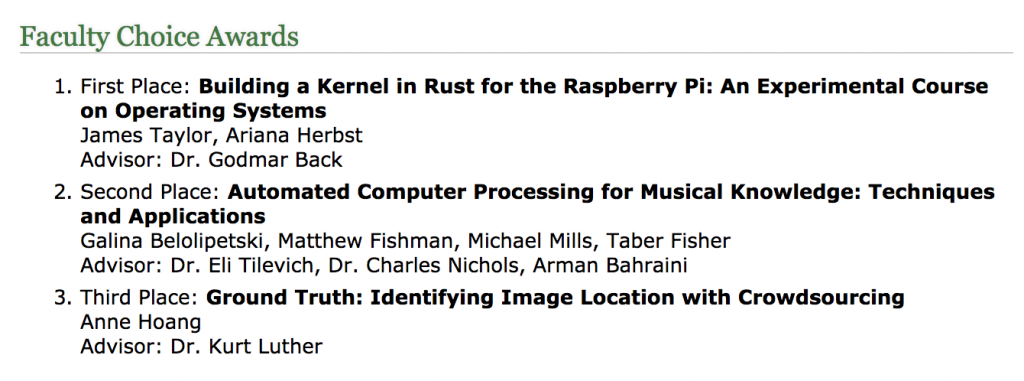

Dr. Luther gave an invited presentation, titled “Solving Photo Mysteries with Expert-Led Crowdsourcing,” at the University of Washington’s DUB (Design, Use, Build) Seminar on February 27. Here is the abstract for the presentation:

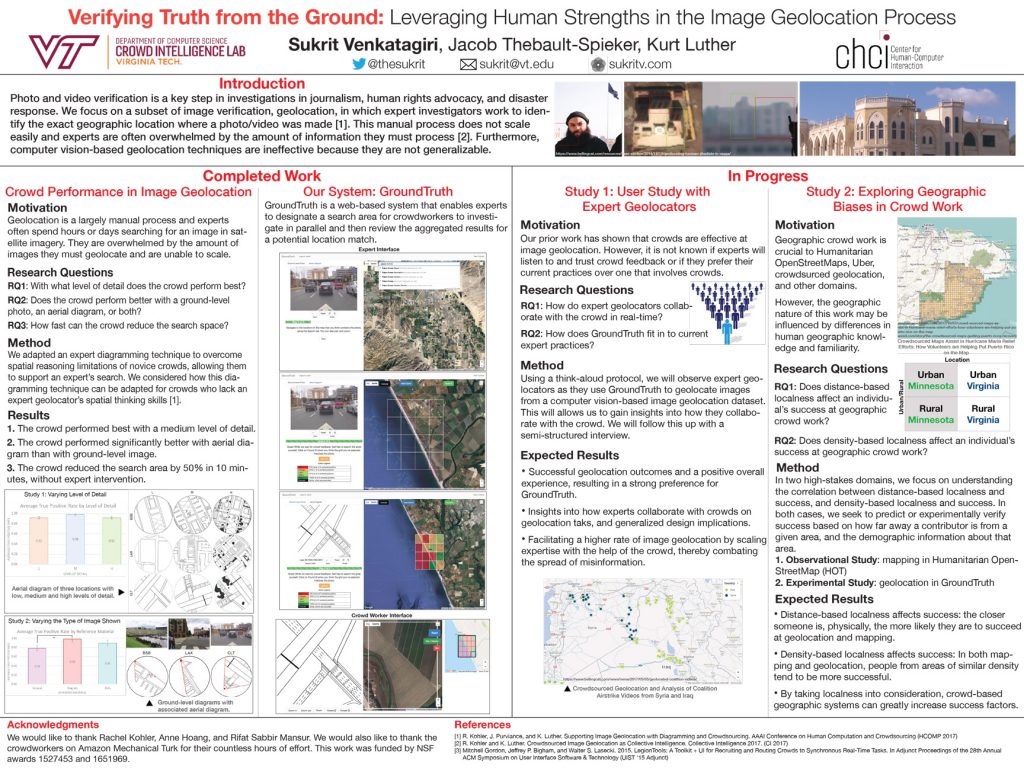

Investigators in domains such as journalism, military intelligence, and human rights advocacy frequently analyze photographs of questionable or unknown provenance. These photos can provide invaluable leads and evidence, but even experts must invest significant time in each analysis, with no guarantee of success. Crowdsourcing, with its affordances for scalability and parallelization, has great potential to augment expert performance, but little is known about how crowds might fit into photo analysts’ complex workflows. In this talk, I present my group’s research with two communities: open-source investigators who geolocate and verify social media photos, and antiquarians who identify unknown persons in 19th-century portrait photography. Informed by qualitative studies of current practice, we developed a novel approach, expert-led crowdsourcing, that combines the complementary strengths of experts and crowds to solve photo mysteries. We built two software tools based on this approach, GroundTruth and Photo Sleuth, and evaluated them with real experts. I conclude by discussing some broader takeaways for crowdsourced investigations, sensemaking, and image analysis.